You've just finished writing the code for an exciting new application and you're ready to share it with the world. Before you launch it, you remember that you have to do your due diligence and test the application to make sure it works as expected. You write unit tests, integration tests, and even end-to-end tests, and everything passes. You even go as far as testing the application on a friend's computer, and everything seems fine. Confident that your application is ready for production, you begin the deployment process.

A few moments later, you realize that your code isn't working on the production servers. Confused, you begin diagnosing the issue, and after a dreaded few hours you find that your code is incompatible with the version of the runtime environment on your production server. The runtime version on your computer and the one on the servers differ enough that your code can't execute there. Excited to have found the cause of this setback, you quickly upgrade the production environment and successfully deploy your code. Your application is now out on the interweb for everyone to enjoy!

I'm sure many developers have had an experience similar to the one described above. Interestingly, some developers won't run into it until it comes time to deploy their code to a production server. To clarify, I'm not talking about applications that use services offering what I call one-click deploys: deploys that happen directly from your Git repository. These services are very useful and make deployment easy, but they can lead to vendor lock-in, in the sense that it becomes difficult to move an application from one platform to another. Rather, I'm talking about applications where we want to retain that autonomy and be free to move to another platform with very little configuration. I'm also talking about applications where developers need the flexibility to build, ship, and maintain code at scale.

Note

It's important to note that whether you need this approach depends heavily on the needs of your application; not every application requires it. Containerization is an approach that developers usually opt into as their application grows.

To achieve this, we need some way to package (containerize) an application so that it has everything it needs to run when we move it from one environment to another. There are many ways to replicate the environment your code currently runs in, but of those methods, containerization has become especially popular.

What are containers?

Containers are packages of software that contain all of the elements needed to run an application in any environment. In this way, containers virtualize the operating system and allow software to run anywhere, from a private data centre to public cloud infrastructure.

Imagine that your application is the orange box above and that it has dependencies essential to its operation, such as a database layer and the correct runtime environment. When you set up a production environment or another development environment, you have to be aware of these dependencies, because your application won't run if they aren't set up properly. Think of the green box as the container that holds your application and all of its dependencies. Now, when you set up a new environment, you simply copy the green box. You can then be confident that the new environment has everything needed to run your application.

Okay, this is cool and all, but how can we take a container, move it to another computer, and still have our application work as expected? We've established that containers let us package all the dependencies our application needs, but how and why does that work?

We spoke a bit earlier about how virtual machines virtualize hardware. Containers are similar, except that they virtualize the operating system. From the point of view of an application running inside a container, the container itself is the operating system. Containerized applications are therefore unaware of the environment outside it.

You can think of a container as a sandbox for an application. The elements inside a sandbox can be manipulated and moved around. However, they are isolated to the sandbox they're in and are unaware of anything outside it.


From the host computer's point of view, a container is just another running process. This makes it possible to run multiple instances of the same container at once. Just as you can have multiple browser windows open at the same time, you can have multiple containers running side by side, isolated from one another.


Imagine that you've just launched your application and after a few days it starts to gain a lot of popularity. To handle the increased traffic, you need some way to scale your application. You can do this either 1) vertically, by upgrading the compute hardware your application runs on, or 2) horizontally, by running multiple instances of your application. Vertical scaling often means moving to a bigger virtual machine. Horizontal scaling is where containers shine, because starting another instance of a container is quick and cheap.

This raises the question: how do we create a containerized application in the first place?

What is Docker?

Docker is a set of platform-as-a-service products that help deliver software in containers. It was developed by Solomon Hykes in 2013, and it has become one of the most popular tools for packaging applications and preparing them for other environments. Docker lets developers package applications into containers, which run as instances of Docker images. This makes it easier and safer to build and manage applications.

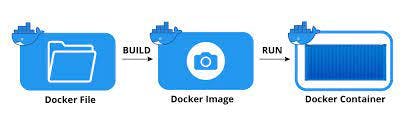

The overall workflow for creating and managing containers is outlined in the diagram below.

Developers write a Dockerfile, which contains the instructions for building a Docker image. An image is a multilayered template used to construct Docker containers. It includes everything from application code to libraries, tools, dependencies, and any other files needed to make the application run. You can think of an image as a snapshot of an environment at a single point in time, which is why images are said to be immutable. Although an image can't be changed, it can be duplicated, shared, and deleted. Docker containers are virtualized runtime environments, created from images, in which applications run isolated from the underlying system. As we discussed earlier, containers virtualize the OS, but they also share the host machine's kernel. As a result, they are very lightweight and can be created and destroyed relatively quickly.

Building an image from a Dockerfile

Let's create a Docker image by writing our own Dockerfile. Say we've finished building our Node.js server using Express and we want to dockerize it before deploying it to a platform of our choice.

First, we need to install Docker Desktop, a desktop application with a GUI that lets you build, interact with, and share your Docker containers. It also includes the docker command-line tool used in the commands below.

In the root directory of your project, create a file named Dockerfile, with no extension. A Dockerfile usually begins with a FROM instruction, which specifies the base image your application's image will be built from, i.e., its starting point. I use "starting point" loosely because you can start from almost anywhere; you could very well start with just an operating system.

The gotcha about Docker

It's awesome that containers share the underlying kernel with the host machine, because it makes them very lightweight and speedy. However, this creates a "gotcha": a container can only run on a kernel from the same OS family it was built for.

Linux containers need a Linux kernel, and Windows containers need a Windows kernel, so you cannot run a Windows container on a Linux machine. On macOS and Windows, Docker Desktop runs Linux containers inside a lightweight Linux virtual machine, which provides the Linux kernel they need.

Since we're containerizing a Node.js Express app, our next step on this route (starting from just the OS) would be to install Node.js inside the container.
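As a rough sketch of that route, a Dockerfile could start from a bare Alpine Linux image and install Node.js with Alpine's package manager, apk. The alpine:3.18 tag is an assumption chosen for illustration. The Node.js version you end up with is whatever that Alpine release packages, not one you pick explicitly.

```dockerfile
# Start from a minimal Alpine Linux image (tag chosen for illustration)
FROM alpine:3.18

# Install Node.js and npm from Alpine's package repositories.
# --no-cache fetches the package index on the fly instead of storing it in the image.
RUN apk add --no-cache nodejs npm

# Print the installed versions during the build so you can see what you got
RUN node --version && npm --version
```

This works, but you have to manage the Node.js installation yourself. Starting from an official node image lets the tag choose the version for you.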

Luckily, programmers are deliberately lazy✌️, so there are public registries of popular Docker images you can start your build from. There are many public registries, but the main one is Docker Hub.

So we can start from a node image instead. Notice that I can specify which version of Node.js to use by appending a tag such as :16, which means the image will use Node.js version 16. Similarly, node:12 means we want Node.js version 12.

./Dockerfile

FROM node:16-alpine

By default, the working directory is the root folder (/), so files we copy in land there. To keep things organized, we'll create a folder for our application code by setting the working directory with the WORKDIR instruction. If the directory doesn't exist, it will be created. Here we set the working directory to /app.

./Dockerfile

FROM node:16-alpine

WORKDIR /app

We now have two options. We can:

- Copy all our source code into the container, npm install our dependencies and start our server

./Dockerfile

FROM node:16-alpine

WORKDIR /app

COPY . .

RUN npm install

- Start by copying just package.json (and package-lock.json, if it exists) into the container and installing the dependencies, then copy the rest of our source code into the container

./Dockerfile

FROM node:16-alpine

WORKDIR /app

COPY package*.json ./

RUN npm install

COPY . .

The two approaches differ, and in short, option 2 is preferred. Earlier we described a Docker image as a multilayered template used to construct Docker containers.

Multilayered is the important term here, because each instruction in a Dockerfile adds a new layer to the image. Docker caches each layer during the build and only re-runs a step if something it depends on has changed since the last build. Once one layer is rebuilt, every layer after it is rebuilt too.

With option 1, any change to our source code changes the COPY . . layer, so the npm install step that follows re-runs and reinstalls all of our Node packages. This is inefficient and leads to longer builds. With option 2, the dependency layers only change when package.json (or package-lock.json) changes. An edit to the source code only invalidates the final COPY . . layer, and Docker reuses the cached npm install layer. That's why option 2 is the better choice for our Dockerfile.

Now that we've installed our dependencies and copied our source code into the container, we need a way to reach our Express app from outside the container. We've told Express to listen on a certain port for HTTP requests, but that port isn't reachable from outside the container by default: for security reasons, a new container doesn't publish any ports. The EXPOSE instruction records which port the app inside the container listens on. On its own, it doesn't publish the port to the host; we do that with a flag when we run the container. It does tell Docker, and anyone reading the Dockerfile, which port the application uses.
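EXPOSE takes a port number and, optionally, a protocol (TCP is the default). As a variation on the plain EXPOSE 5001 line we're about to add, you can define the port once with ENV and reuse it, because EXPOSE supports variable substitution. This minimal sketch assumes the Express app reads its port from a PORT environment variable. That is a hypothetical detail, since the app code isn't shown here.

```dockerfile
FROM node:16-alpine

WORKDIR /app

COPY package*.json ./
RUN npm install
COPY . .

# Hypothetical: assumes the Express app calls something like
# app.listen(process.env.PORT), so this value is actually used.
ENV PORT=5001

# Documents port 5001/tcp via variable substitution.
# It does not publish the port; that still happens with `docker run -p`.
EXPOSE ${PORT}/tcp
```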

./Dockerfile

FROM node:16-alpine

WORKDIR /app

COPY package*.json ./

RUN npm install

COPY . .

EXPOSE 5001

Finally, we need to tell the container how to start our application, which we do with the CMD instruction. Only one CMD takes effect per Dockerfile; if there are several, the last one wins.

In the form used here (the exec form), CMD takes a JSON array of strings. The first string is the executable to run, and the remaining strings are its arguments. For our Express app, it looks something like this:

./Dockerfile

FROM node:16-alpine

WORKDIR /app

COPY package*.json ./

RUN npm install

COPY . .

EXPOSE 5001

CMD ["npm", "start"]

We now have all the instructions needed to build our Docker image, so we can run the docker build command. The -t flag tags the image with a name (and, after the colon, a version) so that it's easier to find and run later. The trailing . tells Docker to use the current directory as the build context, i.e., the set of files that COPY can read from.

docker build -t demo/express-docker-app:1.0 .

There are a ton of other flags that can be used during the build process, and they can be found here.

Now that the image is built, we can either push it to a container registry like Docker Hub or run it locally as a container. To run it, we use the docker run command followed by the image ID or tag.

docker run demo/express-docker-app:1.0

We also have to forward requests made to a port on our host machine to the port our app listens on inside the container. We do this by supplying the port binding flag, -p, to the docker run command.

docker run -p 5001:5001 demo/express-docker-app:1.0

The number on the left is the port on the host machine, and the number on the right is the port inside the Docker container. In other words, -p host_port:container_port.

We now have a running container!

Pop Quiz!

Question: Why is it important to properly map ports when using a docker container?

Volumes

It's important to note that containers don't keep their data. Anything written inside a container is stored in that container's writable layer and is lost when the container is removed. However, there may be situations where you want to keep data created inside the container, and we can do this with volumes. A volume is a dedicated storage location on the host machine, managed by Docker, that containers can mount to read and write data.
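A Dockerfile can also declare a mount point with the VOLUME instruction. If no volume is mounted at that path when the container starts, Docker creates an anonymous volume for it. This sketch assumes the app keeps its data under /db, the same path used as the mount target in this section. That path is an illustration, not something the app requires.

```dockerfile
FROM node:16-alpine

WORKDIR /app

COPY package*.json ./
RUN npm install
COPY . .

# Declare /db as a mount point for persistent data (path assumed for illustration).
# Without a --mount at run time, Docker attaches an anonymous volume here.
VOLUME /db

EXPOSE 5001

CMD ["npm", "start"]
```

Anonymous volumes get random names and are harder to keep track of, so a named volume is usually the easier option to manage.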

To create a named volume, we run the docker volume create command in our terminal:

docker volume create db-vol

We can then mount the volume inside a container when we run it:

docker run --mount source=db-vol,target=/db demo/express-docker-app:1.0

Optimizing our container

Security is a big part of containerization and of what it means to containerize an application well. Remember that we're packaging an operating system along with our dependencies. Like any other operating system or dependency, containers are subject to vulnerabilities, so it's important to think about and implement measures that protect against them.

Earlier, when we were writing our Dockerfile, we talked about adding a tag to the image name to specify the version of Node.js we wanted to use. You may have also noticed an additional suffix on that tag: -alpine. It specifies that the image is based on Alpine Linux, a much smaller Linux distribution.

Slimming containers

Slimming containers is the process of optimizing the size of the container to ensure that the container is built with only the essential components necessary to power the application.

Using the latest node image, we incur an image size of ~353 MB, whereas the slim version is ~76 MB. Note that those are only base image sizes and don't include our application. Still, a smaller base image contributes to a smaller overall image for our application.

Using a smaller variant such as -alpine produces a much smaller image for our application. More importantly, it reduces what is known as the attack surface: the sum of potential entry points through which an unauthorized user could gain read/write access to our environment.

There are other ways in which you can slim down your docker images:

- Only install packages that you need

- Use multi-stage builds

- Use a .dockerignore file

- Take advantage of caching

- Use tools such as dive or Docker Slim to inspect your docker images and containers
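Multi-stage builds benefit most from an example. The idea is to use one stage with everything needed to build the app and a second, clean stage that copies in only the output. The sketch below assumes a hypothetical project with a "build" script in package.json that writes compiled files to dist/, with an entry point at dist/index.js. A plain JavaScript Express app with no build step would gain less; its main saving would come from leaving dev dependencies out of the final image.

```dockerfile
# ---- Stage 1: build ----
# Full dependency install (including devDependencies) plus the build step
FROM node:16-alpine AS build
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
# Hypothetical: assumes package.json defines a "build" script that outputs to dist/
RUN npm run build

# ---- Stage 2: runtime ----
# Start again from a clean base image; nothing from stage 1 is kept
# unless it is copied over explicitly
FROM node:16-alpine
WORKDIR /app
ENV NODE_ENV=production
COPY package*.json ./
# Install production dependencies only (npm 7+ syntax; Node 16 ships npm 8)
RUN npm install --omit=dev
# Copy just the build output from the first stage
COPY --from=build /app/dist ./dist
EXPOSE 5001
# Hypothetical entry point produced by the build step
CMD ["node", "dist/index.js"]
```

Only the final stage ends up in the tagged image. Build tools and dev dependencies from the first stage are left behind, which shrinks both the image and its attack surface.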

Wrapping up

Containerization is an approach that makes it easier for developers to build, ship, and maintain applications. Having said this, I think it's an approach that developers should think about and implement as the size of their application grows -- it's not something that developers should implement at the start of the application process.

We spoke earlier about how the decision to containerize depends on the application, and concluded that not all applications need to be containerized. For static websites, it may be easier to use a one-click deployment service such as Netlify. However, where building web services and/or applications is more involved, developers may benefit from containers, because they give the developer and/or the team a ton of flexibility.

Nonetheless, these decisions are left up to the developers or the development team to ponder!

All right, I'm going to wrap it up there! Hope you found this useful, and I'll catch you in the next one... Peace!